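Following on from the previous article, "Building and Testing Kubernetes at Home (2)", today's goal is to install a load balancer.

A K8S cluster built at home, commonly called bare-metal Kubernetes, does not include a load balancer or an Ingress. Even though current K8S versions ship with kube-proxy, without a load balancer or Ingress the network services cannot be exposed for use. For example, a website you have set up cannot easily be reached through its URL.

A popular free option at the moment is MetalLB: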

MetalLB hooks into your Kubernetes cluster, and provides a network load-balancer implementation. In short, it allows you to create Kubernetes services of type LoadBalancer in clusters that don’t run on a cloud provider, and thus cannot simply hook into paid products to provide load balancers.

Source: MetalLB, bare metal load-balancer for Kubernetes (universe.tf)

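The steps in the installation guide are fairly simple, but in practice I kept running into walls. The main problem was that the Service could not obtain an External IP and stayed in the pending state. There are many suggested fixes online, but most of them did not work, until I found this article (MetalLB: The Solution for External IPs in Kubernetes with LoadBalancer — Learn More! | by Gabriel Garbes | Medium), which solved the problem. My guess is that the other methods (which usually rely on setting config in a ConfigMap) only apply to older versions. The other missing piece was the L2Advertisement setting.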

Setup Log

Install MetalLB

During the installation, I confirmed that firewalld causes the problem. Therefore, let's disable firewalld permanently for smooth operation:

systemctl stop firewalld
systemctl disable firewalld

Take a snapshot of the control-plane VM in VMware before proceeding.

Follow the MetalLB installation instructions: MetalLB, bare metal load-balancer for Kubernetes (universe.tf). Please note that we need to specify the namespace explicitly:

# Preparation - Enable StrictARP in kube-proxy:
kubectl edit configmap -n kube-system kube-proxy
# Location around line #40, change the strictARP from 'false' to 'true'

# Install with Helm
helm repo add metallb https://metallb.github.io/metallb
helm install metallb metallb/metallb --create-namespace --namespace metallb-system
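The strictARP change can also be scripted instead of edited by hand. The sketch below follows the sed-based approach shown in the MetalLB installation guide. The helper name set_strict_arp is my own, and it assumes the ConfigMap contains the literal text "strictARP: false".

```shell
# Hypothetical helper: rewrites "strictARP: false" to "strictARP: true"
# in whatever YAML is piped through it. All other lines pass through unchanged.
set_strict_arp() {
  sed -e 's/strictARP: false/strictARP: true/'
}

# Usage against a live cluster (not executed here):
#   kubectl get configmap kube-proxy -n kube-system -o yaml \
#     | set_strict_arp \
#     | kubectl diff -f - -n kube-system     # preview the change
#   kubectl get configmap kube-proxy -n kube-system -o yaml \
#     | set_strict_arp \
#     | kubectl apply -f - -n kube-system    # apply it
```

Running the diff first shows exactly which line would change before anything is written to the cluster.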

Check if it is installed and running successfully:

# Confirm metallb is running:
kubectl get all -n metallb-system

NAME                                      READY   STATUS    RESTARTS   AGE
pod/metallb-controller-5f9bb77dcd-m6k9k   1/1     Running   0          149m
pod/metallb-speaker-5xd2d                 4/4     Running   0          149m
pod/metallb-speaker-d7rwb                 4/4     Running   0          149m
pod/metallb-speaker-nqjwx                 4/4     Running   0          149m

NAME                              TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
service/metallb-webhook-service   ClusterIP   10.102.254.168   <none>        443/TCP   149m

NAME                             DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR            AGE
daemonset.apps/metallb-speaker   3         3         3       3            3           kubernetes.io/os=linux   149m

NAME                                 READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/metallb-controller   1/1     1            1           149m

NAME                                            DESIRED   CURRENT   READY   AGE
replicaset.apps/metallb-controller-5f9bb77dcd   1         1         1       149m
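Reading the listing by eye is fine for three nodes, but a small filter makes the check repeatable. This is a minimal sketch that assumes the default column layout of kubectl get pods --no-headers (NAME, READY, STATUS, ...). The function name unready_pods is made up for illustration.

```shell
# Hypothetical helper: reads `kubectl get pods --no-headers` output on stdin
# and prints the name of every pod that is not Running or not fully ready.
unready_pods() {
  awk 'NF {
    split($2, r, "/")                      # READY column, e.g. "4/4"
    if ($3 != "Running" || r[1] != r[2]) print $1
  }'
}

# Usage against a live cluster (not executed here):
#   kubectl get pods -n metallb-system --no-headers | unready_pods
```

No output means every pod is Running with all of its containers ready.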

Setting up Address Pool and Layer2 (Important!)

There are a lot of instructions telling you to create or modify the ConfigMap named config to specify the address pool. However, that does not work. Those instructions are most likely suitable only for older versions: from v0.13 onward, MetalLB is configured through custom resources rather than a ConfigMap. Anyway, the following instructions work for MetalLB v0.13.12:

Create a YAML file, e.g.: metallb-post-setup.yaml

apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: first-pool
  namespace: metallb-system
spec:
  addresses:
  - 192.168.11.201-192.168.11.230
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: example
  namespace: metallb-system
spec:
  ipAddressPools:
  - first-pool

Apply it:

kubectl apply -f metallb-post-setup.yaml
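It can help to sanity-check the address range in the IPAddressPool above, for example to see how many Services it can serve and whether a given IP belongs to it. This is a minimal sketch in plain shell arithmetic. The names ip_to_int, pool_size and in_pool are illustrative, and it handles only the start-end IPv4 form used above, not CIDR notation.

```shell
# Convert a dotted IPv4 address to an integer.
ip_to_int() {
  IFS=. read -r a b c d <<EOF
$1
EOF
  echo $(( ($a << 24) + ($b << 16) + ($c << 8) + $d ))
}

# Number of addresses in a "start-end" range.
pool_size() {
  start=$(ip_to_int "${1%-*}")
  end=$(ip_to_int "${1#*-}")
  echo $(( end - start + 1 ))
}

# Succeeds if IP ($2) lies inside the "start-end" range ($1).
in_pool() {
  start=$(ip_to_int "${1%-*}")
  end=$(ip_to_int "${1#*-}")
  ip=$(ip_to_int "$2")
  [ "$ip" -ge "$start" ] && [ "$ip" -le "$end" ]
}

pool_size 192.168.11.201-192.168.11.230    # prints 30
```

To confirm that the resources were actually created, kubectl get ipaddresspool,l2advertisement -n metallb-system should list first-pool and example.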

Test with Nginx service

# Create nginx deployment
kubectl create deploy nginx --image nginx

# Create a service, expose port 80, and use LoadBalancer (i.e. MetalLB)
kubectl expose deploy nginx --port 80 --type LoadBalancer

# Check if the service is running and the external IP is obtained
kubectl get svc

If it succeeds:

# kubectl get svc
NAME         TYPE           CLUSTER-IP       EXTERNAL-IP      PORT(S)        AGE
kubernetes   ClusterIP      10.96.0.1        <none>           443/TCP        18h
nginx        LoadBalancer   10.109.170.157   192.168.11.202   80:31967/TCP   4s

The external IP is obtained as expected. When opening the URL (http://192.168.11.202/) in a browser, the Nginx welcome page is shown.

Therefore, the installation is successful!
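If the EXTERNAL-IP column shows pending instead, it is easier to poll the Service than to rerun kubectl get svc by hand. The sketch below is one way to do that. The function name wait_for_external_ip and its retry defaults are my own choices, and it reads the address from the Service status via a jsonpath query.

```shell
# Hypothetical helper: poll a Service until an external IP is assigned.
# Usage: wait_for_external_ip NAME [NAMESPACE] [TRIES] [DELAY_SECONDS]
wait_for_external_ip() {
  svc=$1; ns=${2:-default}; tries=${3:-30}; delay=${4:-2}
  i=0
  while [ "$i" -lt "$tries" ]; do
    # Empty output means the load balancer has not assigned an address yet.
    ip=$(kubectl get svc "$svc" -n "$ns" \
           -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null) || ip=""
    if [ -n "$ip" ]; then
      echo "$ip"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "no external IP for $svc after $tries attempts" >&2
  return 1
}

# Usage against a live cluster (not executed here):
#   wait_for_external_ip nginx
```

If it times out, kubectl describe svc nginx and the presence of both an IPAddressPool and an L2Advertisement in metallb-system are the first things to check.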

Let's clean it up:

kubectl delete svc/nginx deployment/nginx

We can also deploy an Nginx service with 2 replicas as a test (e.g. nginx-test2.yml):

apiVersion: v1
kind: Service
metadata:
  name: nginx-test2
spec:
  selector:
    app: nginx
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
  type: LoadBalancer
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-test2
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:alpine
        ports:
        - containerPort: 80

# Deploy
kubectl apply -f nginx-test2.yml
kubectl get deployment nginx-test2 -o wide
kubectl get svc

# Check, clean up if success
kubectl delete -f nginx-test2.yml
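Before running the clean-up step, a few repeated requests are a quick way to confirm that the Service keeps answering with two replicas behind one external IP. This is a rough sketch using curl. The name probe_http is illustrative, and the URL is whatever address MetalLB assigned to nginx-test2 in your cluster. It only counts HTTP 200 responses and does not show which pod answered.

```shell
# Hypothetical helper: send N requests and report how many returned HTTP 200.
# Usage: probe_http URL [N]
probe_http() {
  url=$1; n=${2:-5}
  ok=0; i=0
  while [ "$i" -lt "$n" ]; do
    code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$url") || code=000
    if [ "$code" = "200" ]; then
      ok=$((ok + 1))
    fi
    i=$((i + 1))
  done
  echo "$ok/$n"
}

# Usage (not executed here), with the external IP shown by `kubectl get svc`:
#   probe_http http://EXTERNAL-IP/ 10
```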

Update the K8S Dashboard service to use Load Balancer

As we have downloaded the deployment YAML (by manifest), let's modify the service block, changing it from NodePort (if set) to LoadBalancer.

In the service block of recommended.yaml (~ line #30):

- Remove any NodePort type or nodePort setting
- Add type: LoadBalancer

Here is the final version of the service block:

---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: LoadBalancer
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
---

Apply the change, and an external IP is assigned:

[root@k8s-ctrl ~]# kubectl apply -f recommended.yaml
[root@k8s-ctrl ~]# kubectl get svc kubernetes-dashboard -o wide -n kubernetes-dashboard
NAME                   TYPE           CLUSTER-IP   EXTERNAL-IP      PORT(S)         AGE    SELECTOR
kubernetes-dashboard   LoadBalancer   10.96.97.3   192.168.11.201   443:31269/TCP   149m   k8s-app=kubernetes-dashboard

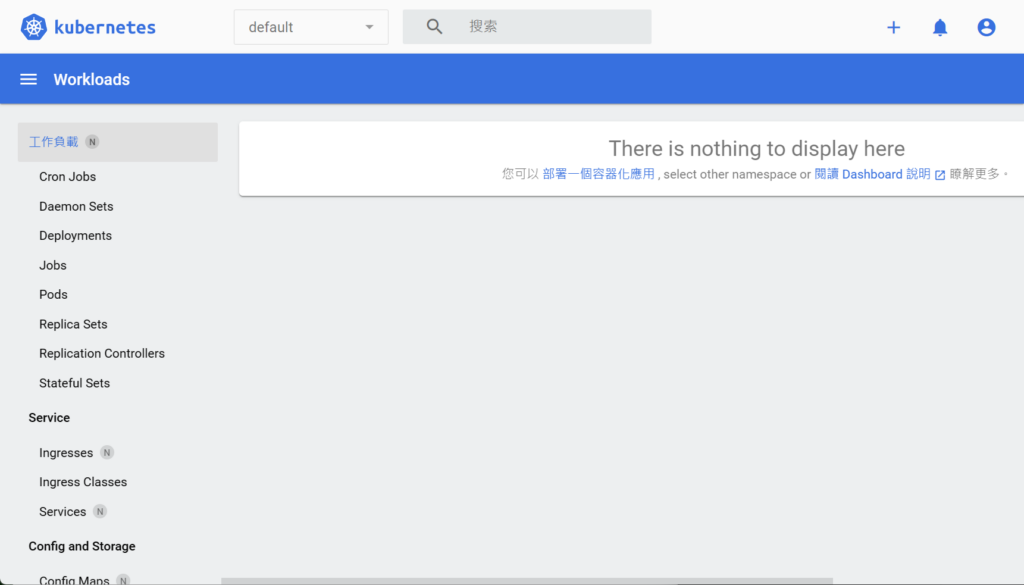

Check from browser, URL: https://192.168.11.201
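As an aside, the Service type can also be switched in place with kubectl patch, without editing the manifest. This is an alternative to the steps above rather than what was done here, and the wrapper name make_loadbalancer is hypothetical. Keep in mind that re-applying an unmodified recommended.yaml later would set the type back.

```shell
# Hypothetical helper: switch an existing Service to type LoadBalancer.
# Usage: make_loadbalancer NAME NAMESPACE
make_loadbalancer() {
  kubectl patch svc "$1" -n "$2" -p '{"spec":{"type":"LoadBalancer"}}'
}

# Usage against a live cluster (not executed here):
#   make_loadbalancer kubernetes-dashboard kubernetes-dashboard
```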

Create the admin user (ONLY FOR TESTING, not for production) and log in. See: dashboard/docs/user/access-control/creating-sample-user.md at master · kubernetes/dashboard · GitHub

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
EOF

cat <<EOF | kubectl apply -f -
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
EOF
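To double-check that the binding took effect, kubectl auth can-i can impersonate the ServiceAccount. Kubernetes identifies a ServiceAccount by a user name of the form system:serviceaccount:NAMESPACE:NAME. The small helper below just builds that string, and sa_user is an illustrative name. The check assumes your own kubectl user is allowed to impersonate, which a cluster admin normally is.

```shell
# Build the user name Kubernetes uses for a ServiceAccount.
# Usage: sa_user NAMESPACE NAME
sa_user() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Usage against a live cluster (not executed here):
#   kubectl auth can-i '*' '*' --as="$(sa_user kubernetes-dashboard admin-user)"
# The command answers "yes" or "no".
```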

Once the admin user is created, generate a token:

kubectl -n kubernetes-dashboard create token admin-user

Keep the generated token and use it for the dashboard login.

(Run the same command again to generate a new token if it is lost.)
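Tokens created this way are short-lived, typically about an hour unless a lifetime is requested. If that is too short for a testing session, kubectl create token accepts a --duration flag. The wrapper below is a sketch: dashboard_token is a hypothetical name, and the API server may cap the duration you ask for.

```shell
# Hypothetical wrapper: request a dashboard token with an explicit lifetime.
# Usage: dashboard_token [DURATION]   (e.g. 1h, 24h; defaults to 1h here)
dashboard_token() {
  kubectl -n kubernetes-dashboard create token admin-user --duration="${1:-1h}"
}

# Usage against a live cluster (not executed here):
#   dashboard_token 24h
```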